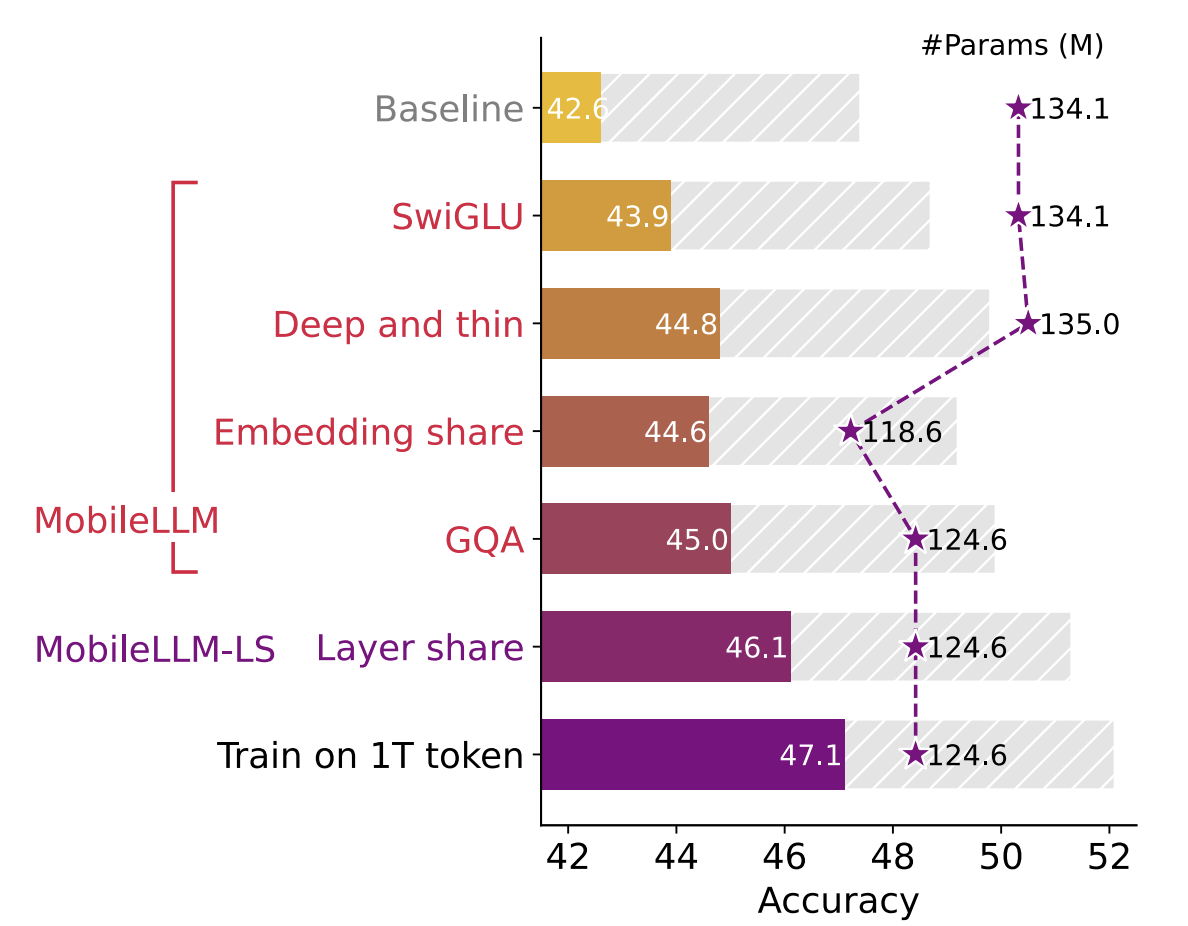

[MobileLLM: Optimizing Sub-billion Parameter Language Models for On-Device Use Cases](https://arxiv.org/abs/2402.14905)

This paper addresses the growing need for efficient large language models (LLMs) on mobile devices, driven by increasing cloud costs and latency concerns. We focus on designing top-quality LLMs with ..